Brief History Of Computer

A computer might be described with deceptive simplicity as an apparatus that performs routine calculations automatically. Such a definition would owe its deceptiveness to a naive and narrow view of calculation as a strictly mathematical process.

During the 1950s and '60s, Unisys (maker of the UNIVAC computer), International Business Machines Corporation (IBM), and other companies made large, expensive computers of increasing power, known as mainframes.

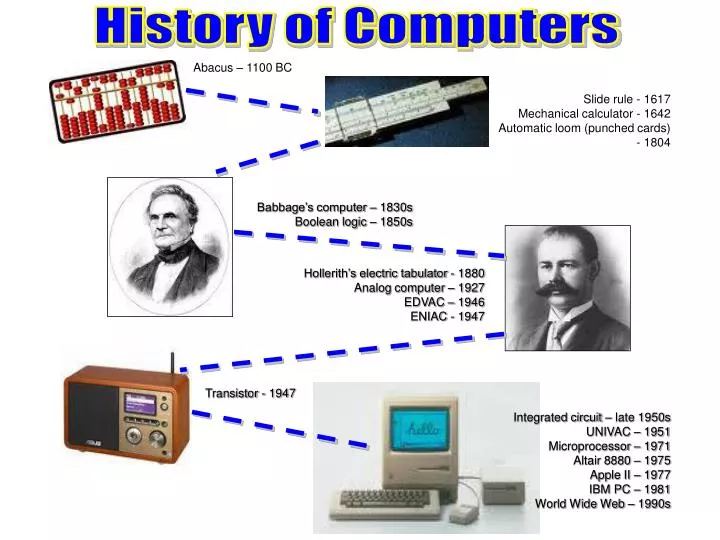

The history of computers goes back as far as 2500 B.C. with the abacus. However, the modern history of computers begins with the Analytical Engine, a steam-powered computer designed in 1837 by the English mathematician Charles Babbage, often called the "Father of Computers."


The concept of modern computers was based on his idea. In 1937, J. V. Atanasoff, a professor of physics and mathematics at Iowa State University, attempted to build the first computer without cams, belts, gears, or shafts. In 1939, Bill Hewlett and David Packard founded Hewlett-Packard in a garage in Palo Alto, California.


A brief history of computers, by Chris Woodford (last updated January 19, 2023): Computers truly came into their own as great inventions in the last two decades of the 20th century. But their history stretches back more than 2500 years to the abacus, a simple calculator made from beads and wires, which is still used in some parts of the world.

The universal language in which computers carry out processor instructions originated in the 17th century in the form of the binary numerical system. Developed by German philosopher and mathematician Gottfried Wilhelm Leibniz, the system came about as a way to represent decimal numbers using only two digits: the number zero and the number one.
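The idea of writing a decimal number with only two digits can be illustrated with a short sketch. The function names `to_binary` and `from_binary` are hypothetical helpers chosen for this example, and repeated division by two is just one common way to do the conversion, not necessarily how Leibniz presented it.

```python
def to_binary(n: int) -> str:
    """Return the base-2 digits of a non-negative integer."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        # The remainder after dividing by 2 is the next digit,
        # produced from least significant to most significant.
        digits.append(str(n % 2))
        n //= 2
    return "".join(reversed(digits))


def from_binary(bits: str) -> int:
    """Rebuild the decimal value from a string of 0s and 1s."""
    value = 0
    for bit in bits:
        if bit not in "01":
            raise ValueError("only the digits 0 and 1 are allowed")
        # Shifting left by one place doubles the value so far.
        value = value * 2 + int(bit)
    return value
```

For instance, `to_binary(13)` yields `"1101"` (8 + 4 + 0 + 1), and `from_binary("1101")` gives back `13`.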

Completed in 1951, Whirlwind remains one of the most important computer projects in the history of computing. Foremost among its developments was Forrester's perfection of magnetic core memory, which became the dominant form of high-speed random-access memory for computers until the mid-1970s.

In 1936, at Cambridge University, Turing invented the principle of the modern computer. He described an abstract digital computing machine consisting of a limitless memory and a scanner that moves back and forth through the memory, symbol by symbol, reading what it finds and writing further symbols (Turing [1936]).
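The machine described above, a memory tape plus a scanner that reads, writes, and moves one symbol at a time, can be sketched in a few lines. This is only an illustration under stated assumptions: the function `run_turing_machine`, the rule table `INCREMENT_RULES`, the state names, and the `max_steps` safety limit are all invented for this example and do not come from Turing's paper. The sample rules add one to a binary number.

```python
from collections import defaultdict

BLANK = "_"  # assumed symbol for an empty tape cell


def run_turing_machine(rules, tape_input, start_state, halt_state, max_steps=10_000):
    """Run a rule table on a tape and return the non-blank tape contents.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R".
    """
    # A defaultdict stands in for the limitless memory: any cell that
    # has never been written reads as blank.
    tape = defaultdict(lambda: BLANK)
    for i, symbol in enumerate(tape_input):
        tape[i] = symbol

    head, state, steps = 0, start_state, 0
    while state != halt_state:
        if steps >= max_steps:  # safety limit, not part of the abstract machine
            raise RuntimeError("step limit reached before halting")
        write, move, state = rules[(state, tape[head])]
        tape[head] = write                    # the scanner writes a symbol
        head += 1 if move == "R" else -1      # then moves one cell
        steps += 1

    used = [i for i, s in tape.items() if s != BLANK]
    if not used:
        return ""
    return "".join(tape[i] for i in range(min(used), max(used) + 1))


# Hypothetical rule table: walk right to the end of the number,
# then walk left turning 1s into 0s until a 0 or blank absorbs the carry.
INCREMENT_RULES = {
    ("seek_end", "0"): ("0", "R", "seek_end"),
    ("seek_end", "1"): ("1", "R", "seek_end"),
    ("seek_end", BLANK): (BLANK, "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", BLANK): ("1", "L", "halt"),
}
```

Calling `run_turing_machine(INCREMENT_RULES, "1011", "seek_end", "halt")` returns `"1100"`, that is, 11 + 1 = 12 in binary. The step limit exists only so a faulty rule table cannot loop forever; the abstract machine itself has no such bound.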